Cisco ACI deployment options


The Cisco ACI platform can be designed and deployed in a number of different ways. There is no strict right or wrong deployment model, but there will be a model that best fits a given set of requirements.

We hope that this post can help you make an informed decision about how to deploy Cisco ACI in the way that is right for your requirements.

Cisco ACI has been around for a while now, and within the enterprise space we have seen it develop from an independent datacenter platform into a feature-rich solution whose capabilities continue to be enhanced thanks to the hard work of Cisco’s datacenter (Insieme) business unit.

Our engineers have seen many different datacenters over the years. Some good, some not so good, but no matter what we as individuals think, they have evolved in their own way for good reason. The evolution of the datacenter is not always driven by technology for the sake of technology but more often by business requirements.

“Business requirements and demand”

The trouble with business requirements and demand is that the existing network is not always ready to support the use case that your new business-critical applications require, and the last thing you can do is delay the deployment of an application that is going to make the business money. That’s when we deploy solutions that start to push the boundaries of the original design, with configurations that sometimes give operational teams sleepless nights.

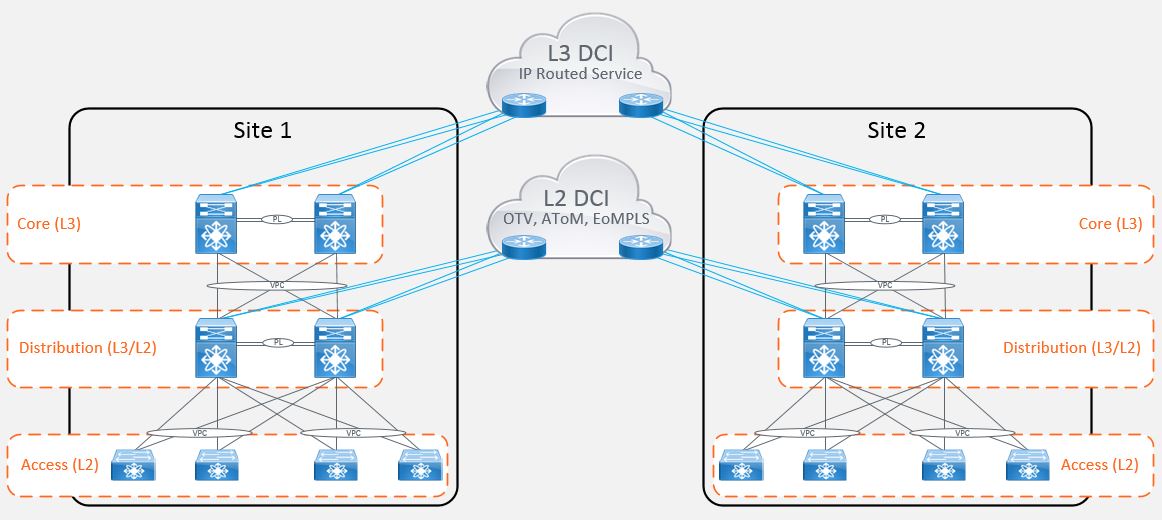

The most common ‘workaround’ is the stretching of layer 2 boundaries between datacenters to provide an active/active state for workloads, compute and storage where devices need to share the same IP subnet in order to operate. There were many different ways of achieving this when the requirement first hit the scene. It was often a ‘VPLS’ or ‘AToM’ circuit, which doesn’t isolate the spanning-tree topology. Then there was ‘Overlay Transport Virtualization’ (OTV) and ‘FabricPath’. The list goes on, but ultimately what all of these options added was a level of complexity when it came to supportability.

Cisco ACI was designed to address some of these issues by abstracting much of the complex configuration and hiding it under the hood. This is done by provisioning data center connectivity using a software-defined networking approach, which allows you to programmatically configure your network in a uniform manner.
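To make the idea of uniform, programmatic configuration a little more concrete, here is a minimal sketch in Python. It only builds the kind of JSON body that would typically be sent to the controller's REST API; nothing is sent anywhere. The class names (`fvTenant`, `fvCtx`, `fvBD`, `fvRsCtx`) follow the ACI object model as we understand it, while the helper `tenant_payload` and the tenant, VRF and bridge domain names are purely hypothetical.

```python
import json


def tenant_payload(tenant, bridge_domain, vrf):
    """Build an APIC-style JSON body for a tenant with one VRF and one bridge domain.

    Illustrative only: the names passed in are hypothetical and the
    structure should be checked against the documentation for your release.
    """
    return {
        "fvTenant": {
            "attributes": {"name": tenant},
            "children": [
                # The VRF (private network) inside the tenant.
                {"fvCtx": {"attributes": {"name": vrf}}},
                # The bridge domain, tied to the VRF by name.
                {
                    "fvBD": {
                        "attributes": {"name": bridge_domain},
                        "children": [
                            {"fvRsCtx": {"attributes": {"tnFvCtxName": vrf}}}
                        ],
                    }
                },
            ],
        }
    }


# The same function produces the same structure for every tenant,
# which is what keeps the resulting configuration uniform.
payloads = [tenant_payload(name, "bd-web", "vrf-main") for name in ("prod", "dev")]
body = json.dumps(payloads[0], indent=2)
print(body)
```

The point of the sketch is the shape of the workflow rather than the exact payload: policy is described once as data and then generated consistently, instead of being typed switch by switch.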

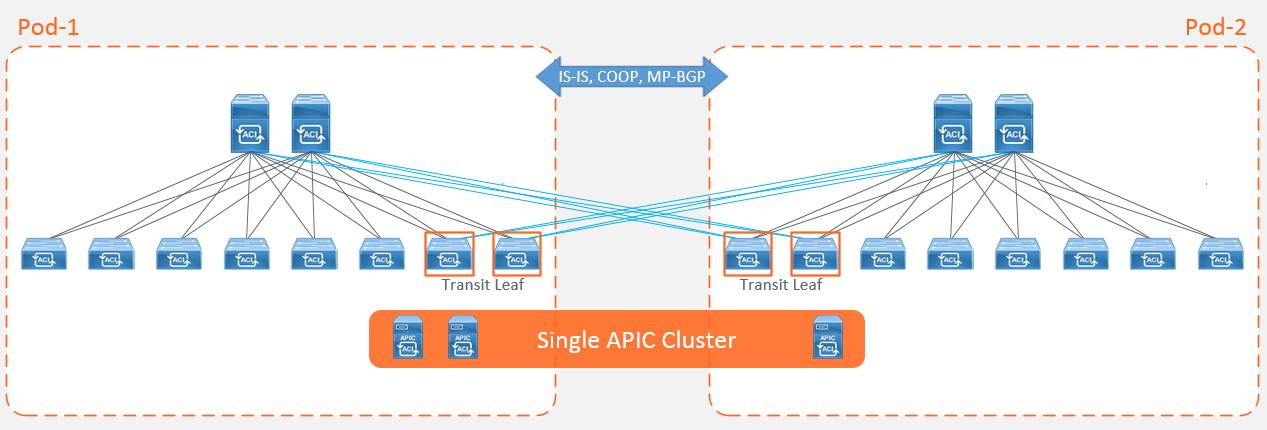

When Cisco ACI first hit the market, the number of deployment models available was limited. To achieve an active/active data center with workload mobility, the only option available was referred to as ‘Stretched Fabric’. This model took a single fabric with a single APIC cluster and stretched it across multiple datacenter locations. The fabric connectivity consists of a partial mesh of leaf and spine switches, whereby not every leaf is connected to every spine, rather than the full mesh of a traditional Clos topology.

The main advantage of this deployment approach was that businesses could maintain consistent end-to-end policy. There were a number of disadvantages; the main ones were the limited scalability of the fabric and the fact that fault domains were extended between locations. It also meant that some leaf switches had to be dedicated to the transit function (to interconnect leaf and spine switches) and therefore could not be used to host compute functions.

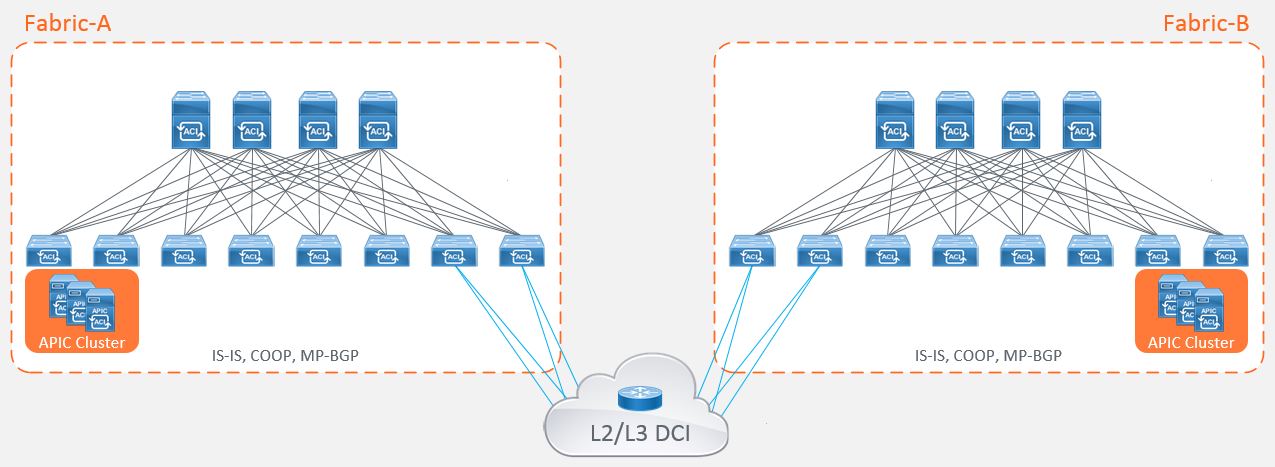

The other option was to deploy two separate fabrics, known as a ‘dual fabric’ topology. This model hosts an independent APIC cluster within each datacenter to provide fault domain isolation at the network layer, with active/active or active/standby applications achieved through complementary technologies within the network such as DNS load balancing. Workload mobility between data centers isn’t something this topology supports out of the box without extending the layer 2 constructs across the DCI as per legacy models, which many people will want to avoid.

The main advantage of this deployment approach was that businesses could achieve scalability whilst providing a level of fault domain isolation. The main disadvantage is that end-to-end policy is difficult to achieve, given the administrative overhead of having to configure each fabric independently. Admittedly, the administrative burden of replicating policy can be overcome through automation and orchestration, but many businesses do not have this functionality in place within the network space prior to adopting ACI.

With these deployment models available, network architects and engineers had to take all of the following considerations into account before deciding which deployment model was suitable:

- Dedicated fabric hardware

- Fault domain isolation

- Latency

- Scalability

- Consistent end-to-end policy

- Existing datacenter cabling topologies
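As a rough illustration of how these trade-offs interact, the sketch below encodes the two early models as simple sets of traits and filters them against a list of must-haves. The trait values are our reading of the descriptions above, the names `Model` and `shortlist` are hypothetical, and considerations such as latency and existing cabling are deliberately left out because they depend on the site rather than the model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str
    consistent_policy: bool
    fault_domain_isolation: bool
    scales_independently: bool
    no_dedicated_transit_leaves: bool


# Trait values are a simplification of the trade-offs described in this post.
MODELS = (
    Model("Stretched Fabric", True, False, False, False),
    Model("Dual Fabric", False, True, True, True),
)


def shortlist(must_have):
    """Return the names of the models that satisfy every required trait."""
    return [m.name for m in MODELS if all(getattr(m, t) for t in must_have)]


print(shortlist(["consistent_policy"]))
print(shortlist(["fault_domain_isolation", "scales_independently"]))
print(shortlist(["consistent_policy", "fault_domain_isolation"]))
```

The last call returns an empty list: with only these two models, a business that needed both consistent policy and fault domain isolation had no option that delivered both out of the box.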

Cisco listened to the considerations of its customers and produced the first major enhancement to the ACI stretched fabric deployment option within the datacenter.

"Cisco ACI Multi-Pod"

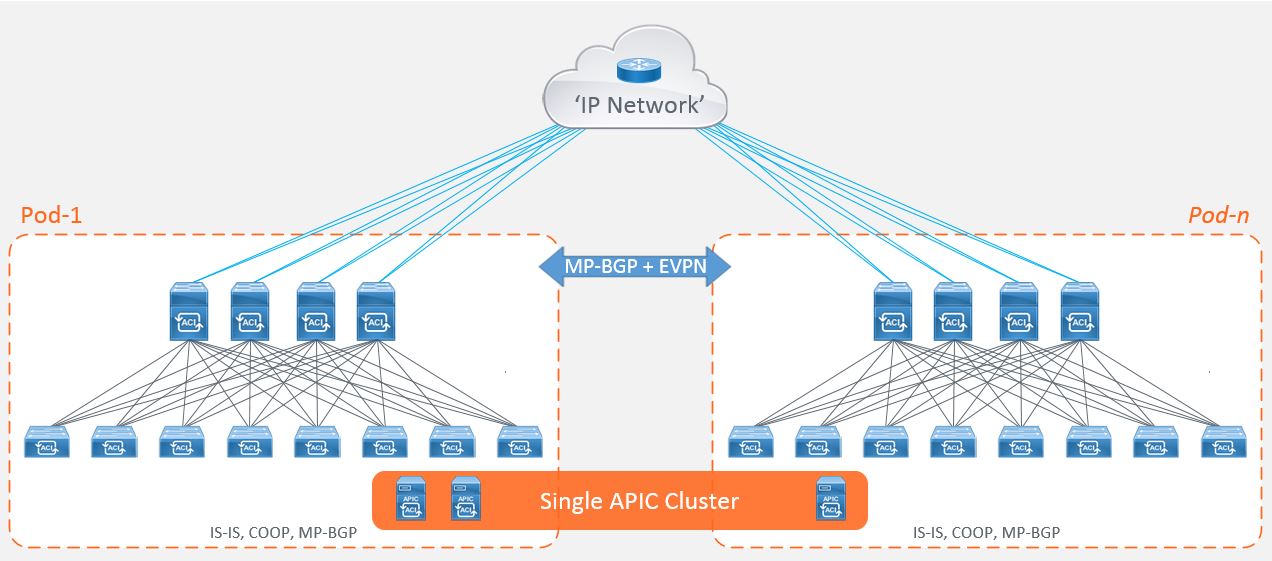

This option gave customers the ability to address some of the inefficiencies of the original stretched fabric design and to make use of spare ports on their spine switches for connectivity to a common IP network, known as the ‘Inter-Pod Network’ (IPN). The IPN is an L3 routed, multicast-enabled interconnect between pods that allows you to achieve an active/active datacenter topology (for those stretched subnets) without the requirement for dedicated transit leaf roles, while also addressing scalability considerations such as the number of supported leaf nodes.

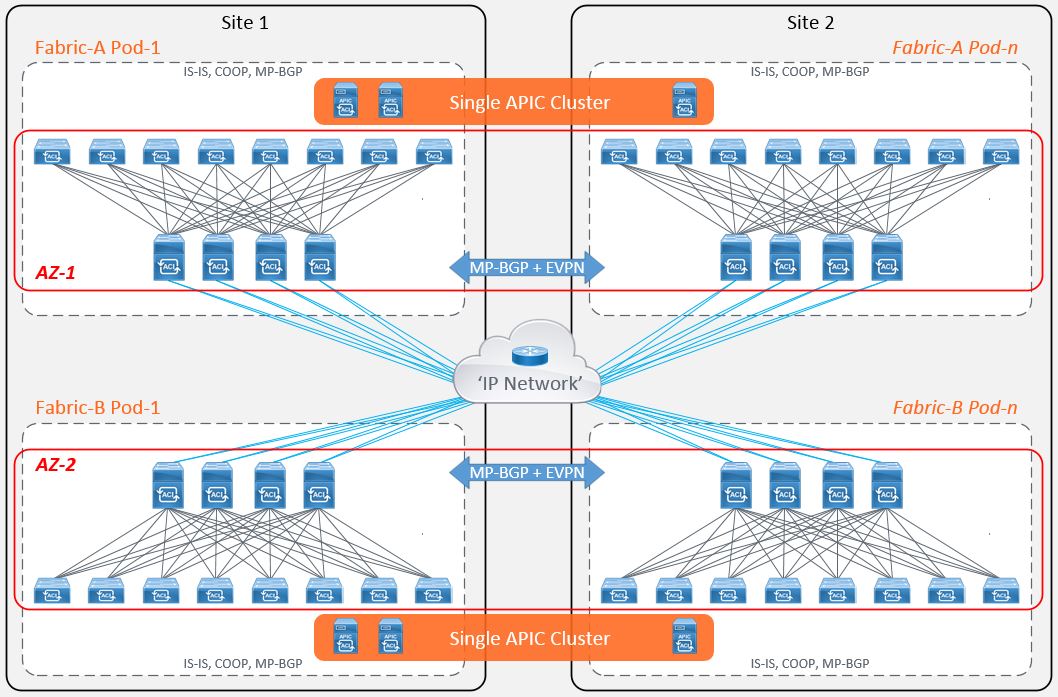

The Cisco ACI Multi-Pod enhancement is the natural evolution of the stretched fabric design that operates by breaking your fabric into ‘Pods’ whilst still using a single APIC cluster. These ‘Pods’ are then interconnected by the routed IPN.

Each pod consists of its own leaf and spine switches, excluding the controllers, and operates its own control plane (IS-IS instance and COOP database). This provides a level of fault domain isolation whilst maintaining consistent end-to-end data-plane policy for traffic forwarding.

A common consideration that drives this choice of deployment might be physical cabling constraints between data halls within the same datacenter, or a number of top-of-rack switches that exceeds the leaf scalability limits for a single pod.

One of the main advantages of the Multi-Pod design is the deployment of policy from one APIC cluster to one or more pods. However, this feature may also encourage engineers to consider alternative designs, as the pods are treated as a single ‘change domain’, which is analogous to a public cloud availability zone. This means that a configuration error, for example deleting a tenant, would be pushed to all pods, so the speed and flexibility of policy deployment come at a cost. This consideration has driven some customers to deploy multiple Multi-Pod fabrics across the same datacenter locations.

More recently, Cisco released the next evolution on the ACI roadmap to address the concerns of interconnecting dual fabrics, providing you with the ability to provision common policy across separate, previously independent fabrics.

"Cisco ACI Multi-Site"

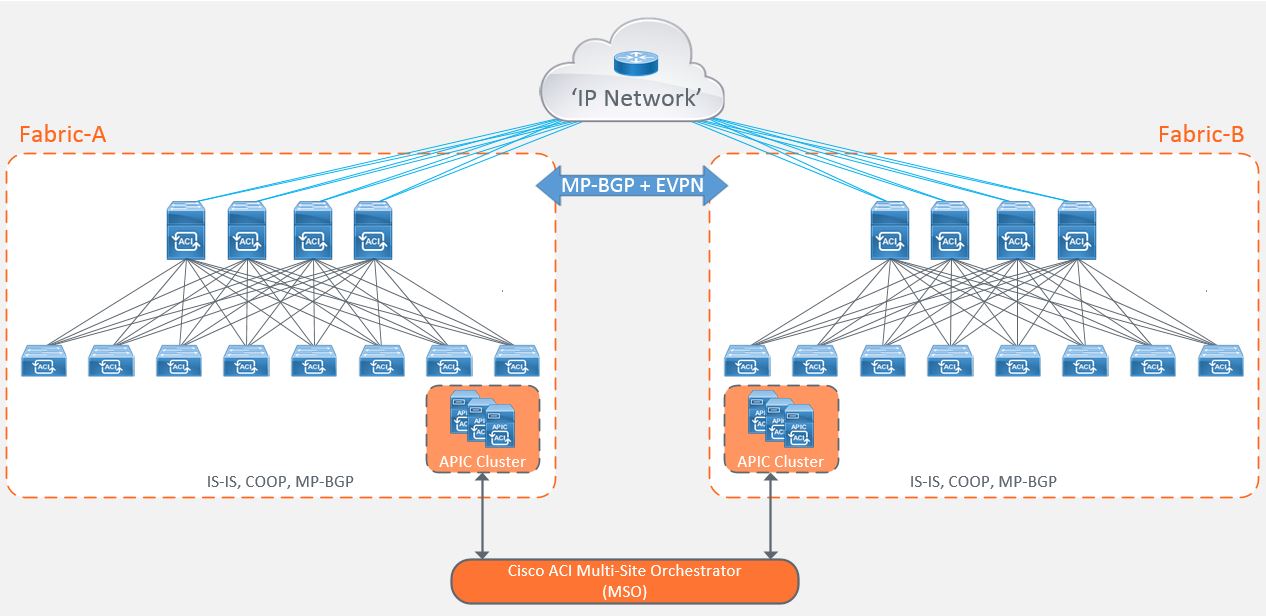

Cisco ACI Multi-Site is the natural evolution of the ‘Dual Fabric’ design that takes on some of the characteristics of a Multi-Pod architecture. This includes active/active stretched bridge domains, but with a number of fault domain, change domain and scalability enhancements and the ability to push policy from a single point. Again taking on board the concept of ‘regions’ from the public cloud providers, Multi-Site works by treating each fabric as a site. Each site is managed by its own local APIC cluster with its own control, management and data-plane logic. It still has the requirement to connect to the IPN, although this time it only requires a unicast topology to interconnect the different sites with each other.

Because each site is controlled by its own local APIC cluster, it maintains its own autonomy from a policy administration perspective. On its own, however, this doesn’t solve the problem of having to configure each controller cluster separately, as is the case with the dual fabric design.

To address this, Cisco introduced the ‘Multi-Site Orchestrator’ (MSO), which is used to program policy and forwarding logic into the APICs and spine switches at each site. It provides reachability and consistent end-to-end policy through the use of different External Tunnel Endpoint (ETEP) addresses for each site within the IPN.

The MSO allows policy to be configured once and deployed to different sites at different times. This solution offers change domain isolation but with central policy management.
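The ‘configure once, deploy per site’ idea can be sketched with a small toy model. This is not the MSO API: the `Site` and `Orchestrator` classes, the template name and the site names are all hypothetical, and the model only tracks which tenants exist at which site.

```python
class Site:
    """A fabric with its own local controller cluster (toy model)."""

    def __init__(self, name):
        self.name = name
        self.tenants = set()


class Orchestrator:
    """Toy stand-in for a central policy manager; not the real MSO API."""

    def __init__(self, sites):
        self.sites = {s.name: s for s in sites}
        self.templates = {}

    def define(self, template, tenants):
        # Policy is written once, centrally, and not yet pushed anywhere.
        self.templates[template] = set(tenants)

    def deploy(self, template, site_name):
        # Deployment is an explicit, per-site step.
        self.sites[site_name].tenants |= self.templates[template]


sites = [Site("dc1"), Site("dc2"), Site("dr")]
mso = Orchestrator(sites)
mso.define("web-app", ["web"])
mso.deploy("web-app", "dc1")

deployed = {s.name: sorted(s.tenants) for s in sites}
print(deployed)  # {'dc1': ['web'], 'dc2': [], 'dr': []}
```

Because `deploy` targets one site at a time, a mistake in the template reaches only the sites it has been pushed to so far, which is the change domain isolation described above. Pushing the same template to `dc2` and `dr` later is just two more `deploy` calls.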

The most common consideration that drives this choice of deployment might be the ability to deploy the same application across datacenters that are geographically separated, such as US, EMEA and APAC. Alternatively, you may have three datacenters, two active and one ‘Disaster Recovery’ (DR) site, and you want to ensure that the policies applied to the two active DCs are the same as those deployed within the DR site, so that business continuity applications are readily available.

Briefly returning to the original statement about how implemented network designs can constrain business requirements: this is something that the ACI platform is addressing. The different deployment models mentioned earlier aim to provide a single fabric or multiple fabrics that can accommodate business requirements without having to rearchitect the network.

During our recent visit to Cisco Live, we were given a number of insights into the upcoming roadmap. One of the interesting options is the ability for Multi-Pod and Multi-Site to co-exist within the same deployment, alongside some key enhancements to the already established Multi-Pod feature.

We hope you enjoyed the article. As and when we know more, we’ll update our community with another post.

BestPath.